The Ethics of Modern Communication

A BlogBook is a series of posts intended to be woven into a single book-length essay.

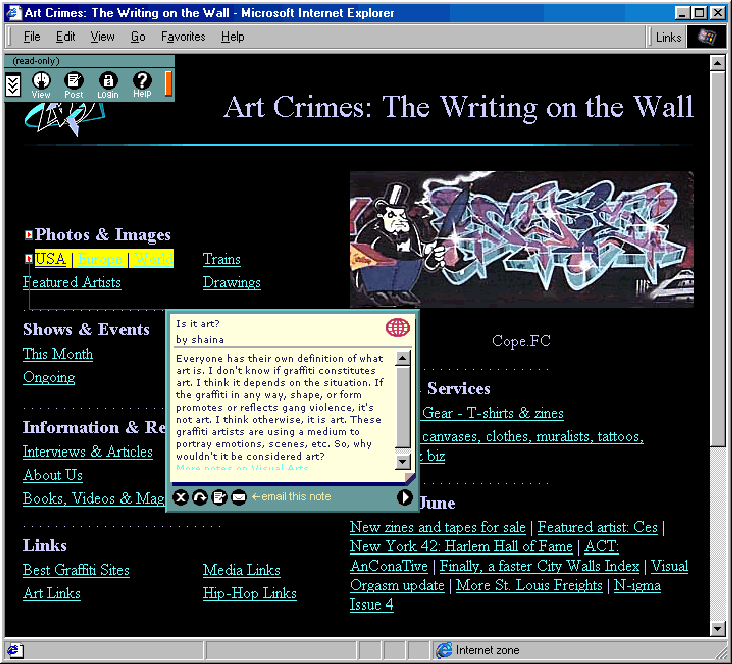

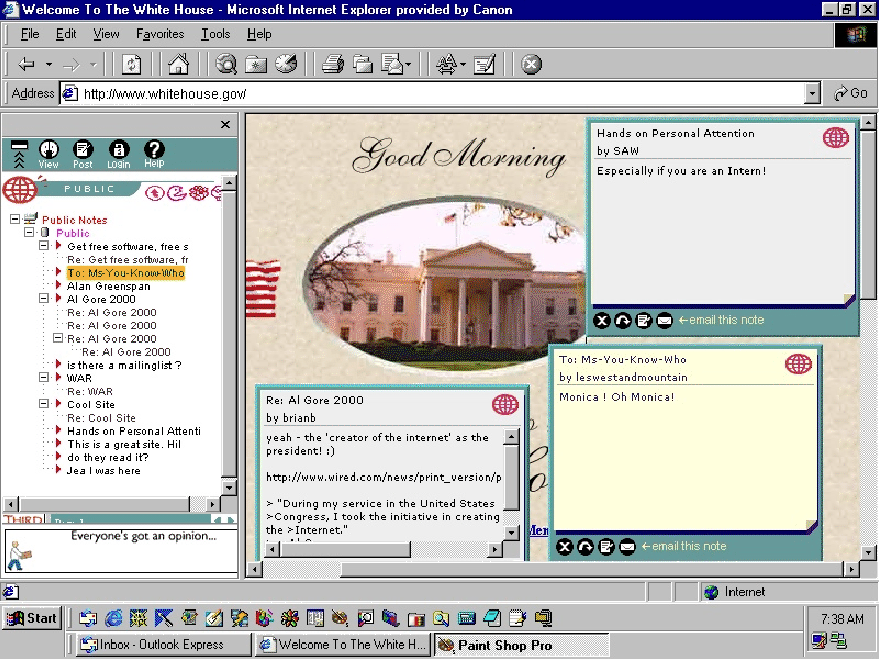

I wrote this book around 2000 and 2001 to cover the ever-mutating field of the Internet in terms of the ethics of such communication, particularly as it focuses on copyright and related issues.

While the writing style could use some improvement, I find that I still largely stand by everything I wrote 20 years ago. The biggest change that I would include is something to address the increasing hostility of the Internet environment. While this essay tends to point in the direction of ad blocking and similar technologies not being very ethical on theoretical grounds, in practice I think they’re almost a necessity for security reasons nowadays.

But otherwise, I think this largely holds up. Despite the superficial frantic changes in the Internet world, this all still makes sense.

In 2022, a new challenger is arising in the form of AIs that can consume the entire Internet (more or less) and then be used to produce “novel” content. Especially in the image AI space, this is getting a lot of people wondering about the copyright status of the output of such systems. As I write this on the tail end of 2022, I think people are finding themselves working around to the same conclusions I came to 20 years ago; if you slurp up that much content just to feed your AI you must be considered to be deriving from all those sources. An emerging story to be sure.

Prelude

I've been particularly interested in how the Internet is affecting law and society for several years now. I've seen a lot of debating, litigating, and legislating, but there is little or no consistency in the rhetoric.

In the past, we've had legal frameworks that allowed us to approach these issues with some degree of consistency. Copyright was built on an ethical framework built on certain simple ethical principles: A creator should be given the opportunity to benefit from his work. A creator deserves credit for his work. The market should be grown. A created work must eventually be released back to society, as the goodness of public ownership exceeds the goodness of continued private ownership for the society. These and other simple principles are the foundation of copyright law, even if we have not followed them perfectly.

But communication has changed radically since the precepts in the previous paragraph were first propounded. In all the debating, litigating, and legislating, virtually nobody has examined the ethical foundation of modern communications. Is it any wonder that the resulting legislation and court decisions have been largely garbage?

This essay exists to correct that major oversight. Indeed, the situation has changed, and due to the complicated nature of modern communication technologies, attempting to create an ethical foundation is decidedly non-trivial. This is not to say that the foundations we built the current legal system on have somehow become irrelevant, but how the principles play out in real life is no longer obvious. Rather than starting with a desired conclusion and making sure I end up with it, I examine the situation afresh, hopefully shedding light in corners you didn't even realize existed. In many cases I myself was surprised by the results, particularly by the Death of Expression chapter, where something I thought would be easily salvageable turned out to be a complete loss. The final goal of this essay is to try to construct such an ethical foundation, and from it derive some sort of useful framework for thinking about the ethical problems, leaving the final construction of the legal framework to Congress and the courts.

I do not respect a person, group, or ideology that defines itself solely in opposition to something. Opposition should flow from positive opinion about what should be that happens to contradict somebody else's ideas. To respect my own opinions about communication issues, I feel it is necessary to thus propose a system that describes how we should be doing things, because it's simply too easy to take potshots at an existing system when you have no responsibility to replace it. It is also too easy to paint oneself into a corner when one is simply being critical of things; without a coherent vision, it's easy to end up accidentally contradicting oneself, which I believe has happened many times, even to groups like the EFF.

I run a weblog called iRi, which is descended from a weblog tracking these issues and trying to synthesize all the various stuff that's happened over the last few years into some sort of positive, cohesive whole. It became clear to me quickly that the weblog format was insufficient for the task. There is so much to cover that it simply cannot be done in small chunks, because there is so much context I need to lay down. So instead of trying to lay this out in a long series of weblog posts, I started writing this essay. As you'll see, each chapter builds on the last and there's a high degree of cohesion in this essay; trying to post it in little chunks would never have worked. Now that this essay has been written, I have stopped posting so much stuff about these topics as I feel it is redundant to what I have written here.

I do not ask you to swallow my views unchallenged. Indeed, I encourage dissension; the odds of this essay being perfectly correct are small. But I hope this will stimulate you to think about these issues in new and hopefully profitable ways. There are ideas in here that I have not seen anyone talk about, even in the years of debate I've been watching, and even in the years it has taken me to write this essay, and the years it has been sitting here largely completed. Even a year after the first publish date I think it is still ahead of its time.

This essay alternates between pure ethical theory and legal concepts, both current and future. The connection is that law is always used in this essay as "applied ethics". In the real world, it is not always the case that laws reflect applied ethics; one need not search too hard for laws that seem to be applied corruption. But we will treat the law in this manner, especially as the ethical "action" of the law is often more revealing than the "words" of theory, and transitions will not be labelled, as that would cause too much bloat.

Who This Is For

This essay was written in the United States, about the United States. All references to "this government" and "the people" refer to the United States Government and United States citizens. Conditions in your country may vary... but as I am talking ethical theory here, the contents of this essay still apply.

The Conventional View of Communications

The first question that needs to be answered is, "Is there any need to re-analyze the ethical situation? Can our current legal/ethical framework handle the challenges posed by modern communication technology?"

In this chapter, I'm going to cruise through history and extract an overview of the development of the concepts that the legal system has developed to handle various issues as they arose. We will re-examine the history of content distribution and the history of copyright principles, and draw some connections between them, both traditional and novel. We will examine how we lost track of the ethics of copyright and got stuck in the trap of believing that ad-hoc, expedient solutions were instead immutable wisdom of the ages. In the end it should be clear that the introduction of the Internet and associated technologies constitutes a qualitative change in communication technology that will require extensive further refinements.

This is a high-level summary, not an enumeration of the thousands of details added over the years. The intention is to lay the groundwork for a demonstration of the deep flaws in the current system. Because the conventional view freely mixes laws and ethics, this chapter will not go to great lengths to separate them either.

I'm going to assume that the readers of this essay are already familiar with both the justifications for intellectual property and free speech, and the basic historical reasons for the creation of each of those, and do not need Yet Another (Probably Oversimplified Anyhow) Explanation of why Gutenberg's printing press more-or-less caused the creation of the concept of copyright. (Again, this is already long enough without rewriting that yet again.) Therefore, since you know the basics of the conventional view of copyright, we can focus on synthesizing a new and better understanding of communication issues, rather than reiterating conventional understandings.

Definition of Information

Information is used loosely in this essay to mean anything that can be written on some medium and transmitted somehow to another person. Writing, sculpture, music, anything at all. Information can be communicated, which will be more carefully defined later. Yes, this is broad, but there is a rule of thumb: If it can be digitized, it's information.

Note that digitization is very, very powerful. While few people may own the equipment to do it, there is no theoretical difficulty in digitizing sculpture, scent, motions, or many other things people may not normally consider digitizable. Even things like emotions can be digitized; psychiatrists ask their patients to do so all the time ("Describe how anxious you're feeling right now on a scale of 1 to 10."). While something like a written letter may be fully analog, one can generally create some digital representation that will represent the letter satisfactorily, such as scanning the whole letter and sending the image file.

"Communication" is simply the transfer of information from one entity to another. The details of that transfer matter, and it's worthwhile to examine the history of those details.

Historical Overview of Information Transmission

Reduced to a sentence, the primary effect of Gutenberg's printing press on information reproduction was to make the production of words relatively cheap. For the first time in history, the effort required to make a copy of a textual work was many times less than the effort required to create the original copy, thus making the production model of "Make an original copy of a book, then print thousands of copies of it quickly for a profit" practical.

Ever since then, technology's primary effect is to lower the cost of various production models with various media until they are practical for an increasing number of people. Every major challenge to intellectual property law has come from this fundamental effect.

Hand Copying

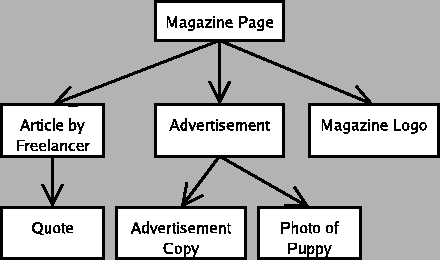

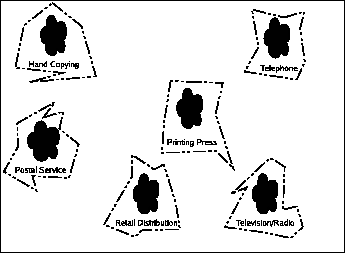

Let's create a graph to look at how various parameters affect the ability to do something profitably, examined over time. (Though not strictly in chronological order as it is often difficult to place a date on when a given technology became truly practical.)

You'll note there's only one black dot, which is hand-copying material. You'll also note that the graph has no axis labels. This is because there are, in reality, a lot of things that could go on those labels, like cost-per-copy (extremely high), time per copy (a lot), number of customers waiting for product, etc., and there are even more as time goes on, so we're just going to pretend that the graph is two dimensional so we can fit it on the paper. I'll talk about some of the noteworthy parameters as we go through.

At this point, there was no such thing as copyright. Copying was an unmitigated good for a society. Numerous documents have disappeared from history because they weren't copied, now existing only as obscure references by other works which were successfully copied.

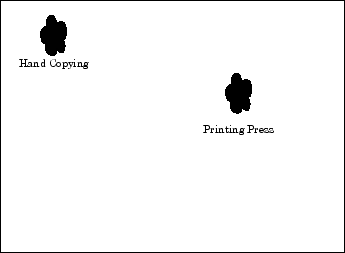

Gutenberg: Books, Magazines, Newspapers

Gutenberg's invention added another possibility. By lowering cost-per-copy and time-per-copy by orders of magnitude, it became practical to run thousands of copies of a book and sell each of them at a lower price than a single hand copy would cost. A significant time investment was required to set up each run, though, and that became a new constraining factor. With this new ease of replication, the first rumblings of copyright law began, but it was still a very simple domain, so the laws were simple, at least by modern standards.

Another side effect of Gutenberg's invention was the ability to reach an unprecedented number of people with the same message, because of the sheer number of copies that could be cranked out and delivered to people, rather than requiring the users to come to one of the rare copies of the content. This introduces the notion of the scale of communication; throughout history, we have always treated communication reaching many people quite differently from private, 1-to-few communication. Perhaps one of the most important effects was that such technology made it much easier to spread propaganda. Before such easy printing, propaganda required a network of people to verbally communicate it to the targets; printed propaganda, combined with widespread literacy, enabled much smaller groups to effectively use propaganda, which has obvious large effects on the fluidity of a society and the intensifying of common discourse.

As printing press technology improved, people could set up content for the press faster. The lowering of the cost-to-setup enabled the invention of newspapers (and by extension all periodicals), which are basically cost-effective periodic books. A new practical content distribution solution appeared, and it too affected the law. People wanted to use this new platform for political purposes, but the centralized nature of the printing press made it easy to shut down if a powerful person disliked what the newspaper said. To counter this, our ethical concepts of free speech and the freedom of the press, initially synonymous, were created. In America we even get this guaranteed as part of the First Amendment to our Constitution; your country may vary.

Printing was a major improvement over hand copying, but it is not a perfect information distribution system. The most obvious problem is the need for physical distribution of the printed materials, which was a major part of the cost. The necessities of daily/weekly/monthly distribution to hundreds or thousands of points for periodicals within a subscribing area required a huge infrastructure investment, and non-periodicals needed some infrastructure too, though it wasn't as demanding. There also need to be enough readers (amount of use) to make it economical to print a given newspaper.

You'll note the hand-copying blob is still there. That's because it was every bit as practical to hand-copy things before the printing press as it was after; that almost nobody chose to do it for book-sized communications means that they felt they had a better choice, but the choice was still there. To this day, hand-copying is still the preferred method of communication in many smaller domains, such as jotting down addresses, phone numbers, and simple notes. Rarely, if ever, does a truly new form of communication completely displace an older one.

Postal Service

The postal service is not often considered an important advance in the context of intellectual property, but in communication terms it is the earliest example of an information distribution service that was capable of reliably sending a single copy of something from one single person to another single person. On a technical level, the modern Internet functions much more like a fifty-million-times-faster postal system than the more often used metaphor of the telephone system, so study of the postal system can potentially provide insight into the Internet as well.

Whether or not this pre-dates Gutenberg's press mostly depends on what you call the first "postal service". Ancient China had a decent one, as did Rome, but the scale and universality of the modern postal services places them in a different league entirely. The forces of technology have given postal services the ability to serve more people and allow them to send more types of things, culminating in that greatest of postal service triumphs, commercially viable junk mail.

That may sound funny, but it's serious, too. It takes an efficient system to make it worthwhile to simply send out a mailing to "Boxholder", and have any hope of it paying off economically.

We often ignore the media in which normal people can communicate with other normal people on small scales, because the large ones that we are about to look at look so much, well, larger that the postal service seems like it's not worth considering. It's an important advance, though, and has empowered a lot of political action, direct sales, even entire industries that might have otherwise never existed. And it has caused the creation of its own fair share of laws and principles. The postal service is primarily interested in the transportation of objects more than "information" per se, but laws have been developed for strictly communication-based crimes, such as using the postal service to send death threats.

The key things a postal service needs are cheap, reliable transportation and customers... lots and lots of customers. It needs to be economical to process each of these point-to-point transmissions, and this means you need to either make up the cost in scale as traditional postal services do, or charge your customers higher prices as courier services do.

Radio and Television

Radio and television (which at the level I'm covering them are similar enough to treat as two aspects of the same technology) are entirely different beasts.

With radio and television, content could be broadcast quickly, even immediately ("live"), to any number of people around the country or the world. The customer had to invest in technology capable of receiving these transmissions, but the general public found radio and television more than compelling enough to invest billions of dollars in. Fast forwarding to today, we find that content with broad appeal can be broadcast profitably, and news-type content can be broadcast multiple times per day (entire channels can be dedicated to what is essentially the same hour of content, like CNN), increasing the frequency of transmission to "hours" from "days". "Niche" content can also succeed on a smaller scale, though there is still a relatively high break-even point. Broadcast schedules began their intricate dance with the American public as each started to schedule itself around the other.

Radio and television also broke free of the tyranny of the written word. Radio was one of the first technologies that could handle sound directly (its only competition was records, and when those became a truly viable technology is a judgment call), and television introduced the even more exciting world of video. It was a long time before this truly strained copyright law, as it was not until the 1980s that the mass-market consumer had any easy, practical means of reproducing video (via the VCR). Concern about the equivalent of recording from a radio did not exist until the 2000s (the ability to record a digital stream directly from a digital radio), because the mass-market consumer did not have the technology to widely reproduce and distribute a recording with any quality. So we can see that one of the pressures on copyright law is the availability of technology that can produce or reproduce content in a given medium.

Radio and television have their own constraining factors too. The expense necessary to put together even the simplest of professional-quality programs is quite high, which introduces the concept of "cost of entry". In theory anybody could start up a television program or network; in practice, it is vastly more difficult than simply having a printing press print 1000 copies of something. Large transmission towers must be constructed, electromagnetic spectrum must be allocated (extremely limited in television before the advent of UHF), and a large staff must be hired to run the station. Thus, only a limited number of large networks could afford to take full advantage of the medium. This has changed with the wide-scale use of cable, and its corresponding ability to transmit low-quality programs without an expensive transmission tower, allowing "public access" channels (probably only due to Federal mandate, though it's hard to know for certain), but the networks still dominate.

The issue of what we now call "monetization" also appeared, brought clearly to mind by the inability to charge for a physical artifact like the printed word. I am aware of three basic viable models: The advertising-based model, the government tax model used in the UK, and a subscription model where an encrypted signal is broadcast and special decoders must be rented (only feasible relatively recently). This also interacts with the economies of scale; you can't make money advertising if only three people are watching your show, whereas forced taxation allows you to target smaller audiences, as long as you can get government funding. An echo of this problem can be seen on the Internet, except the advertising solution isn't working as well.

Of all of the media discussed in this chapter, I think radio and television have been most deeply affected by the way the industries monetize the medium; one need only compare a day's programming from the BBC or PBS to NBC to see the differences.

Retail Distribution Networks

The retail distribution networks have put content into the hands of "the general public". It has become economically viable to distribute content by having the customer come to some retail outlet that orders many copies of various kinds of content and allows the customer to purchase them and take them home. Much like the postal service, this required the invention of reliable transportation and a large enough customer base to sustain the retail outlet.

And much like the postal service, only more so, this has affected the law by affecting everyone, not just some select group of people with convenient access to content in a copyable form. Perhaps more than anything else, the developments implied by the large-scale distribution of content in retail stores, put together with the need for consumer technology such as VCRs to use this content, have brought this down to the level where the decisions made regarding these issues will affect everybody in their day-to-day life. What can I do with this CD? Why can't I send my friend a quote from an electronic book?

The existence of a large-scale distribution network for some kind of content, like sound recordings, tends to imply some sort of standard medium for distributing that content. As more people own players for that medium, the technological pressure to create technology to allow the mass-market consumer to also create content on that medium increases. Thus, a few years after the introduction of the CD-ROM, we get mass-market CD writers. DVD writers arrived even more quickly than CD writers did, relative to the initial introduction of the medium. A large-scale retail distribution method by its very existence tends to create market pressure for the creation of technology that will be capable of allowing the user to, among other things, violate copyright laws.

Telephones

Telephones are much like the postal service. In general, telephones are hardly different at all from speaking face to face, and there is little special treatment required to handle them. But I do mention them because of the nuisance issues that the law has had to deal with regarding telemarketers, scammers, and other people abusing the medium for personal gain. We will find the principles inherent in the laws laid down for telephones useful in some other similar circumstances later, most notably the issues surrounding "forced" communication, such as e-mail spam.

We can go ahead and roll fax machines into this category too; their biggest impact on the law was also a "forced communication" issue, where junk faxes could cost the recipient money for toner and paper. As such, fax machines are very similar to normal telephones when it comes to the legal issues.

Final Diagram

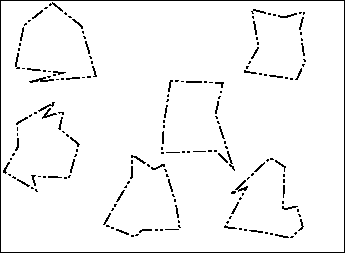

Our final diagram (cleaned up for convenience) has a number of isolated splotches, and a large number of candidates for what could be considered to be axes:

- cost per copy

- scale of people reached (one, tens, thousands, millions)

- time to produce per copy

- number of paying customers

- initial setup costs

- transportation demands

- economies of scale

- speed of transmission

- availability of (re)production technology to the mass-market consumer

- cost of entry

- method of monetization

- whether reception is "forced"

There are no connections between these splotches, because there are no "in betweens" which are economically viable. Running a television station does nothing to help you run a postal service, because the postal service has "transportation demands" and "initial setup costs" that the pre-existing television station does nothing to defray. Even the two most structurally similar systems, the phone system and the postal system, are very different beasts, and there's no way to use one to help build the other in any significant way. This is important, because the assumption that each of these domains was independent became an unspoken assumption in the law.

What that means in practical terms is that when a legal pronouncement was made about television ("A given company may only own two television stations in a given market"), it had little or no impact on the other communication technologies.

The separation isn't completely perfect; if you try you can come up with some things that affected multiple types of communication at the same time. But even some of the most basic ethical principles were often defined differently for different media; witness the difference between slander and libel, for instance, virtually identical high-level concepts that differ only in whether they occur in spoken or printed word.

I'd also like to point out that there's a lot more on that diagram than I believe most people are considering, since they tend to limit themselves to merely mass media like television and the Internet. By adding in some of the other technologies, we'll find that we can actually find a simpler, more general pattern that applies even after the Internet comes into existence, by focusing not on the technology but on the patterns of communication itself.

Before Internet - Almost Attained Stasis

I believe that in the period between around the 1950s (1940s if you are willing to fudge a bit on the retail distribution issue) and the late 1980s, a time period of thirty to forty years, there were no major technological developments that truly changed the landscape as described above. This is certainly a bit of a judgment call, as things like the Xerox copier appeared, but I'd say that those were refinements to existing law, not truly new stuff. (However, other than possible changes in the general legal climate, there is no compelling reason these suits could not have been filed decades earlier and won then.) Even when tape technology was introduced, both audio and video, the difficulty of copying analog tapes accurately precluded large-scale copyright violations and the consequent pressure on the law, even if it did prompt the now-notorious Boston Strangler comment by Jack Valenti:

"I say to you that the VCR is to the American film producer and the American public as the Boston strangler is to the woman home alone." - Jack Valenti, President of the Motion Picture Association of America, Hearings before the subcommittee on Courts, Civil Liberties and the Administration of Justice, 1982. Transcript available at http://cryptome.org/hrcw-hear.htm.

Thus, for forty or fifty years the law had been revised and refined in Congress and with international treaties such as the Berne Convention, and clarified by our court system, until there were hardly any mysteries left about what was legal and what was not. That's long enough for a complete generation or two of lawyers and lawmakers to come and go; this has the unfortunate effect of convincing people that the current system is the only possible system and there will be no major changes necessary. Veneration of the current system has reached a quasi-religious status, where questioning the current system, or even questioning whether we should continue to strengthen the system (question the "meta-system", as it were), gets one labeled a heretic.

So here's the trillion-dollar problem:

Our final diagram has lots of isolated splotches here and there, looking totally independent. Rather than taking the time to truly map the domain of discourse and look at all of the issues in a coherent way, laws and judicial decisions exploited the independence of the media types, and each individual segment got its own laws. The laws were informed by the principles of intellectual property law and certain guarantees of rights, but only "informed by"; deviation was seen as harmless or even good, since it helped match the law to the real world better. Thus, we have created a legal system that in practice consists of a lot of special cases and a very few defining principles.

Despite the inelegance of such a system, it worked, it was well-defined, and while large in size, well understood once you've absorbed all the information on a given topic.

It is truly unfortunate that it did work; it's given us some horrible legal habits.

After The Internet - Stasis Broken

The Internet's effects on law can be understood by looking at this figure (with apologies to those who are printing this out to read it):

Here's the "problem": The Internet is extremely powerful technology, and is only getting more powerful. There isn't a single axis of our diagram that hasn't been significantly affected by the Internet. Cost of entry? Believe me, if this were 40 years ago you would not be reading this, because I could never afford to self-publish this physically, and I would simply have never written this. Transportation? It doesn't get any easier than sitting in your own home and accessing the world. Scale? Sometimes people accidentally send email to tens of thousands of people when they intended to only send it to one person; that's how easy large-scale communication is on the Internet.

Remember when I defined information? "Basically, if it can be digitized, it's information"? The Internet is all about the transmission of information. When considered in the context of the rest of the computer revolution, which has digitized everything, from simple words to video to interactive games to, well, everything, the true effect of the ability to transmit information in all of its forms becomes visible: The Internet allows every model to be viable economically, all at once!

Want to create a movie for the enjoyment of your family members (and nobody else) over the Internet? No problem, people do that all the time. Publish music to the entire world? Yep, we can do that too. Write an e-mail to Grandma? Yep. Write an e-mail to every one of the thousands of employees of Intel (Intel vs. Hamidi) almost as easily? Can do.

The Internet in a period of just a few years has taken each of the bubbles that we saw in the previous section and rapidly expanded each of them until they all touch, overlap, and envelop each other. For instance, creating a video for an audience of two is possible because the Internet expands the capabilities of a consumer to have much of the distribution power of a major television network to send someone a video. On the other side, the Internet expands the television studio's viable scales of production, usually limited only to the "ultra-large" scale, to include the ability to make truly economical microcontent available. Similar things have occurred in the radio domain, and entire sites have indeed sprung up in an attempt to make a profit off of this, such as Live365.com, which assists people in creating what are essentially radio stations.

The DMCA (Digital Millennium Copyright Act) is providing another example of this kind of crossover. It is probably safe to say that the DMCA, specifically its anti-circumvention-device clauses, was only intended to protect movies, music, software, and other traditional media. Because it is excessively broad and poorly worded, it has been twisted to prevent people from buying "unauthorized" printer ink refills. On the other hand, through some serious sophistry it has been found not to prevent people from manufacturing compatible garage door openers. To prevent abject absurdity requires extreme effort on the part of the judge, and the result is still far from logically rigorous; instead it smells like an attempt to continue to justify a law even in the face of obvious absurdities.

One could hardly imagine a more thorough way to challenge the traditional communications frameworks. As you recall, the important thing about the diagram I developed in the previous section was that all the sections were isolated, which became an unspoken assumption in the rest of the legal discourse. To put the problem succinctly, the problem with the current legal system is that the foundational assumption that the legal domains are independent is no longer valid, which has invalidated all laws built on that assumption.

We cannot simply patch around this problem, because the system is already a patchwork quilt and you can't continuously patch patches. From day one, laws were made without regard for the other communication domains so contradictions and simple conceptual mismatches between the domains are the rule, not the exception. The very principles upon which the practical system was built have been shown lacking. We must fix the problem at a deeper level.

Communication Ethics book part for Ethical Drift. (This is an automatically generated summary to avoid having huge posts on this page. Click through to read this post.)

This also explains the ethical drift that has occurred over the last sixty to eighty years. While hammering out the earliest versions of copyright law, it was critical to create an ethical framework for thinking about the issues. But once the system is built, it is easy to treat the system as the goal and forget about the original ethical foundation that it was built on. Forgetting the true foundations of the system was made even easier by the fact that there weren't very many true challenges to the system; adding one more domain may look exciting at the time but the excitement is contained within that domain. It is easy to see with only a little study of the origins of intellectual property that the mistaking of means for ends is nearly complete in current intellectual property law and trends. Only a small fringe group discusses the ethical issues any longer in terms of responsibilities and the basic goals of the intellectual property legal machinery; the vast majority of the discourse is in terms of the rights of the owners, and protection of rights, and often even the ensuring of profit, which is far removed from the original reasons given for our current system.

Communication Ethics book part for Legal Attempts To Deal With The Change. (This is an automatically generated summary to avoid having huge posts on this page. Click through to read this post.)

Let's look at the diagram again, but remove the black which represents viable activities.

You'll note that the law no longer covers the complete domain of activities. This presents some major problems, as people such as MP3.com have found out. As a way of branching out, MP3.com attempted to create a business where they would create high-quality MP3 files of thousands of CDs (at the time, it was technically challenging to create a good MP3 from a CD, as evidenced by the notoriously spotty quality of files downloaded from the original Napster), and when people proved to MP3.com that they owned the CD, MP3.com would give them access to the prepared MP3 files. MP3.com figured this would not be an issue because they made the customer prove that they already possessed a legal copy of the song or album they could obtain. The courts found that MP3.com was distributing music files, which requires a license that MP3.com didn't have. Subsequently, that service has been shut down.

This is a perfect example of what the modus operandi of the legal system has been up to this point: To try to extend all of these little circles outward until they cover all the scenarios that are important at the moment. In this case, the courts decided that distribution of music in this manner was the same as distributing new CDs to the customers. Unfortunately, because each of these sets of laws has been designed with any number of hidden assumptions based on the domain that it was created for, there are unresolvable conflicts everywhere these laws overlap.

For instance, consider "sending digital video over the Internet". In early 2000 in Australia, the part of the government charged with licensing television broadcasters briefly considered trying to require anybody in Australia who wants to transmit streaming video over the Internet to acquire a broadcaster's license.

Notice that if one only considers the point of view of those familiar with television, this requirement not only makes perfect sense, it shows a good understanding of how the growth of the Internet could affect television. It is probable that an Internet site in a few more years could broadcast streaming video twenty-four hours a day, seven days a week, at television qualities to television-station sized audiences, effectively gaining all the capabilities of a television station. In only a few more years, this capability will exist for everyone. Obviously, if a country wants to regulate what can appear on television, the country will not want to allow such "stations" to bypass the decency laws of that country just because it isn't a "television station". Thus, it was proposed that the bureau should consider requiring streaming video websites to meet the licensing requirements of a television station. Do not think I am mocking this idea; from the point of view of television regulation, this was an unusually forward-thinking idea, especially for the year 2000.

Fortunately, it was rapidly struck down... because when you look at this with anything other than a television-centric viewpoint, it quickly degenerates into absolute absurdity. Does Grandma need a full-fledged broadcasting license just to post streaming video of her grandchildren's birthday party for the father who's on a business trip on the other side of Australia?

And flipping it around, can we completely duck the issue of requiring a broadcasting license by not "streaming" video? In places where two classes of law overlap, like in this example where television law is overlapping with personal law, people will attempt to "dance" from one legal domain to another. So, if the law specifically requires streaming video providers to obtain licenses, a provider may decide to delay the video by an hour, create several files containing an hour of content each, and allow the visitor to view those on the web in a non "streaming" fashion, which violates the spirit of the law, but not the letter. In every way that matters, they are still broadcasting and deriving all the benefits thereof (especially if they also just "happen" to schedule all of their content in advance by an hour so this delay gets cancelled), but they've either ducked the law or forced the creation of unwieldy special-case clauses in law or the policies of some entity like the FCC.

And this is only one small case involving two domains! Imagine all the wonderful conflicts you could create with a bit of creativity, many of which have already occurred, somewhere. I could go on for another twenty or thirty pages proving this point with examples, but that would exhaust us both. If there were only a limited number of these intersections, we could cover them all with special cases as we have in the past, but the combinations and conflicts are nearly limitless, with every year's new technology adding a few more.

Here, you can make your own conflicts. Pick one or two from this list: Video, music, spoken word, deriving works from (such as making custom cuts of movies), text, images, software. Pick one from this list: over the Internet, on demand, traded via peer-to-peer, with some unusual monetization system (such as micropayments). Pick one from this list: to one person, to a select group of persons, to a small number of people in general, to the general public. Odds are very good that unless you deliberately select something that already exists ("Video monetized through advertising to the general public" a.k.a. "Television"), you can find cracks in the law. If you select two from the first list ("Music and spoken word over the Internet to a small number of people in general", which is personal radio station DJ'ing, which is a popular hobby in some subcultures), you're certain to find conflicts. And this isn't even an exhaustive list of possibilities, just what I came up with in a few minutes.
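To get a rough feel for how quickly these combinations pile up, here is a small Python sketch that enumerates them. The list entries are paraphrased from the paragraph above, and the names (`CONTENT`, `DELIVERY`, `AUDIENCE`, `scenarios`) are purely illustrative choices of mine, not part of any real system.

```python
from itertools import combinations, product

# Entries paraphrased from the three lists in the text (illustrative only).
CONTENT = ["video", "music", "spoken word", "derived works",
           "text", "images", "software"]
DELIVERY = ["over the Internet", "on demand", "peer-to-peer",
            "unusual monetization"]
AUDIENCE = ["one person", "a select group",
            "a small number of people in general", "the general public"]


def content_choices():
    """Yield every way to pick one or two content types."""
    for size in (1, 2):
        yield from combinations(CONTENT, size)


def scenarios():
    """Yield every (content, delivery, audience) combination."""
    return product(content_choices(), DELIVERY, AUDIENCE)


all_scenarios = list(scenarios())
print(len(all_scenarios))  # 28 content choices * 4 * 4 = 448
```

Even this admittedly incomplete list produces 448 distinct scenarios, and only a handful of them correspond to a traditional, well-regulated medium.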

I think it is clear that the current approach cannot succeed. We cannot address each problem that arises with a stop-gap solution; each such stop-gap adds two more conflicts to the system. Even if we stuck with it long enough to nail down each problem the hard way, and in this era of multi-year lawsuits that would take a very long time, it would still be messy and inefficient, and would still be subject to radical upheaval by a new technology. We are far better off laying a completely new foundation.

Communication Ethics book part for A Communication Model. (This is an automatically generated summary to avoid having huge posts on this page. Click through to read this post.)

On the one hand, you'd think we all understand what communication is well enough to talk about it meaningfully, since we all do it from a very young age. On the other hand, personal experience shows that people get very easily confused about the communication that occurs in the real world. Most people can't really answer "What happens when you request a web page from a web server?" If you can't even meaningfully answer questions about how things work or what happens, how can you expect to understand the ethics of such actions? And a complementary question, if one must have a post-grad degree in computer science to understand what is going on, how can we expect to hold anybody to whatever ethics may putatively exist?

Since nobody can adequately describe what's going on, the debates almost inevitably degenerate into a flurry of metaphors trying to convince you that whatever they are arguing about is the same as one of the existing domains, and should be treated the same way. The metaphors are all inadequate, though, because as shown earlier, there is a wide variety of activities that do not fit into the old models at all. As a result, the metaphor-based debates also tend to turn into arguments about whether something is more like television or more like a newspaper. There are sufficient differences between things like using a search engine and reading a newspaper to render any metaphor moot, so the answer to the question of which metaphor is appropriate is almost always "Neither."

Before we can meaningfully discuss ethics, we need to establish what we mean by communication, and create a model we can use to understand and discuss various situations with. We'll find that simply the act of clarifying the issues will immediately produce some useful results, before we even try to apply the model to anything, as so frequently happens when fuzzy conceptions are replaced by clear ones. After we're done, we will not need to resort to metaphors to think and talk about communication ethics; we will deal with the ethical issues directly. As a final bonus, the resultant model is simple enough that anybody can use it without a PhD in computer network topology.

Communication Ethics book part for The Model. (This is an automatically generated summary to avoid having huge posts on this page. Click through to read this post.)

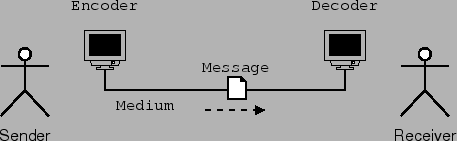

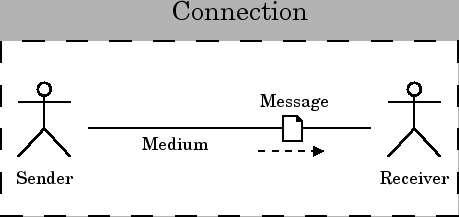

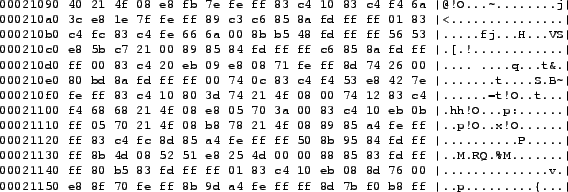

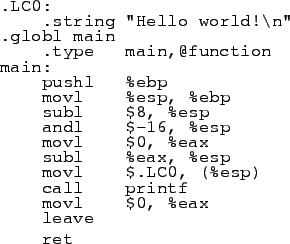

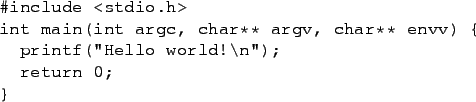

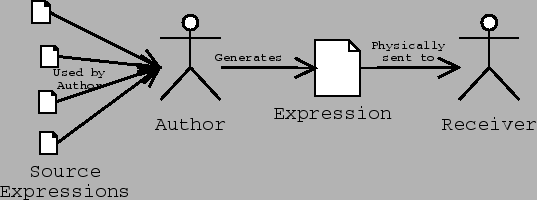

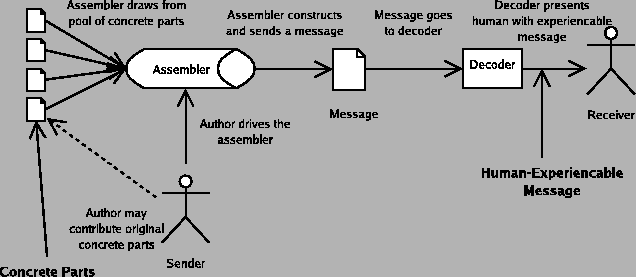

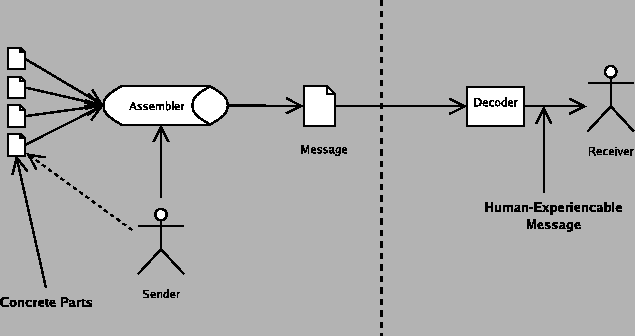

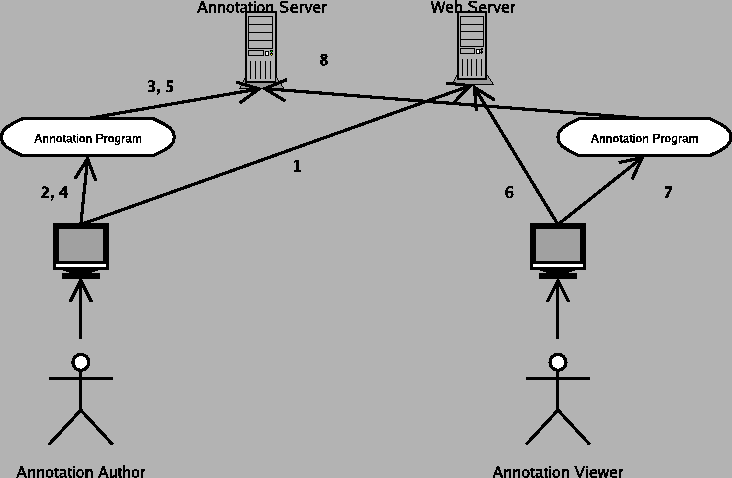

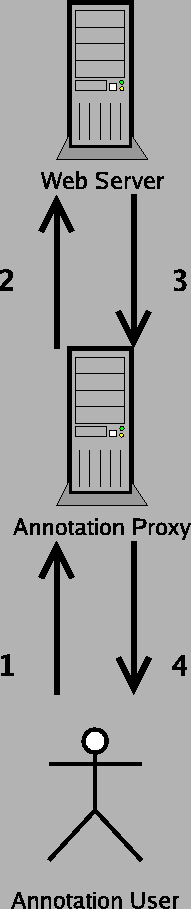

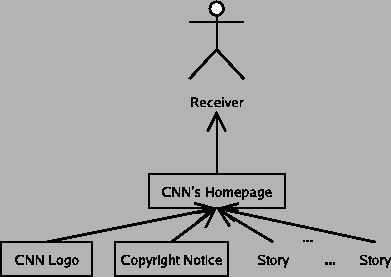

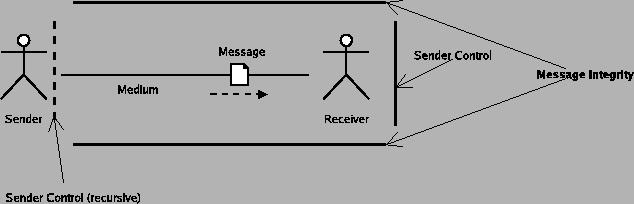

For this model, I take my cue from the Internet and the computer revolution itself, because it is a superset of almost everything else. Telecommunication engineers and other people who deal with the technical aspects of communication have created a very common model of communication that has six components, which are a sender, an encoder, a medium, a decoder, a receiver, and a message, as in figure 10.

The parts of this model are as follows:

- Sender: The sender is what or who is trying to send a message to the receiver.

- Encoder: In the general case, it is not possible to directly insert the message onto the communications medium. For instance, when you speak on the telephone, it is not possible to actually transmit sound (vibrations in matter) across the wire for any distance. In your phone is a microphone, which converts the sound into electrical impulses, which can be transmitted by wires. Those electrical impulses are then manipulated by the electronics in the phone so they match up with what the telephone system expects.

- Message: Since this is a communication engineer's model, the message is the actual encoded message that is transmitted by the medium.

- Medium: The medium is what the message is transmitted on. The phone system, Internet, and many other electronic systems use wires. Television and radio can use electromagnetic radiation. Even bongo drums can be used as a medium (http://eagle.auc.ca/~dreid/overview.html).

- Decoder: The decoder takes the encoded message and converts it to a form the receiver understands, since for example a human user of the phone system does not understand electrical impulses directly.

- Receiver: The receiver is the target of the message.
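For readers who think better in code, the six parts listed above can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the `Channel` class and its field names are my own invention, UTF-8 encoding stands in for the microphone and phone electronics, and the "wire" is assumed to be perfect.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Channel:
    """Hypothetical sketch of the engineer's model of communication."""
    sender: str
    receiver: str
    encoder: Callable[[str], bytes]   # e.g. microphone plus electronics
    medium: Callable[[bytes], bytes]  # e.g. the wire
    decoder: Callable[[bytes], str]   # e.g. the speaker on the far end

    def transmit(self, text: str) -> str:
        message = self.encoder(text)    # the encoded message
        carried = self.medium(message)  # what arrives at the other end
        return self.decoder(carried)    # a form the receiver understands


phone = Channel(
    sender="caller",
    receiver="callee",
    encoder=lambda text: text.encode("utf-8"),
    medium=lambda signal: signal,  # assumption: a perfect, lossless wire
    decoder=lambda signal: signal.decode("utf-8"),
)

heard = phone.transmit("hello")
print(heard)
```

Swapping in a `medium` that drops or alters bytes is an easy way to see how much of the final result depends on things neither the sender nor the receiver controls.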

As a technical model this is fairly powerful and useful for thinking about networks and such. However, since the model is for technical people for technical purposes, it turns out that it's actually excessively complicated for modeling the ethics of communication.

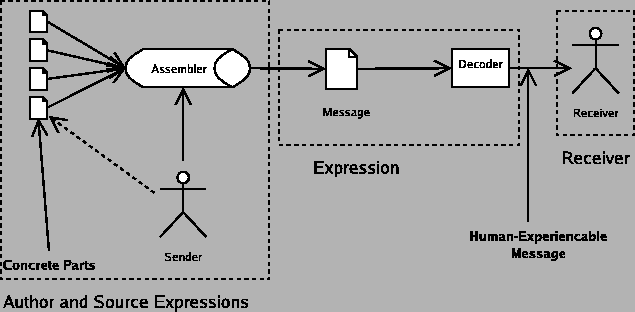

We can collapse the encoder and decoder into the medium, because we never care about the details of the encoder or decoder in particular; it is sufficient for our purposes to consider changes to the encoder or decoder to be essentially the same as changes to the medium.

That leaves us four basic components.

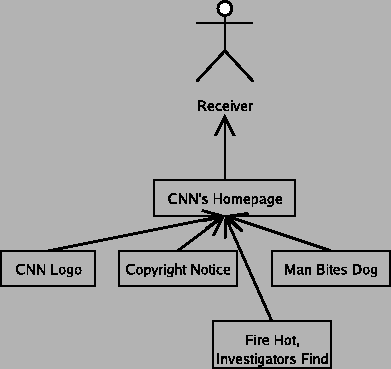

The base unit of this model can be called a connection.

- connection

- If there is an identifiable sender, receiver, and medium, they define a connection along which a message can flow. When the sender sends a message, the medium transmits it, and the receiver receives the message.

Note that until the message is sent and received the medium may not literally exist; for instance, your phone right now theoretically connects to every other phone on the public network in the world. However, until you dial a number or receive a call, none of the connections are "real".

A connection is always unidirectional in this model. If communication flows in both directions, that should be represented as two connections, one for each direction.

To send a message across the connection, a connection is initiated by a sender, and the receiver must desire to receive it, excepting sound-based messages which due to a weakness in our physical design can be forced upon a receiver. Either can occur independently; a receiver may be willing to receive a message, but the sender may not send it until they are compensated to their satisfaction. A sender may wish to send a message, but no receiver may be interested in receiving it.

For a given message from a sender to receiver, the "medium" is everything the message traverses, no matter what that is. If the phone system offloads to an Internet connection to transmit the message part of the way, and the Internet connection is then converted back to voice on the other end, the entire voice path is the medium. It may sometimes be useful to determine exactly where something occurred, but except for determining who is "to blame" for something, all that really matters are the characteristics of the medium as a whole.
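The properties of a connection described above can be summarized in a short Python sketch. Everything here is a hypothetical illustration: the `Connection` class, the `opposite` method, and the `deliver` function are names I made up, and the sketch ignores the sound exception mentioned earlier.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Connection:
    """A one-way connection: sender -> medium -> receiver."""
    sender: str
    receiver: str
    medium: Tuple[str, ...]  # everything the message traverses, as a whole

    def opposite(self) -> "Connection":
        """The separate connection needed for traffic the other way."""
        return Connection(self.receiver, self.sender, self.medium[::-1])


def deliver(connection: Connection, message: str,
            sender_willing: bool = True,
            receiver_willing: bool = True) -> Optional[str]:
    """Return the message only if both sides take part, else None."""
    if sender_willing and receiver_willing:
        return message
    return None


# A phone call that is partly carried over the Internet: the whole path
# counts as the medium.
call = Connection("Alice", "Bob",
                  ("phone network", "Internet link", "phone network"))
conversation = (call, call.opposite())  # two-way talk is two connections

print(deliver(call, "hi"))
print(deliver(call, "hi", receiver_willing=False))
```

Representing a two-way conversation as a pair of one-way connections keeps each direction's sender, receiver, and willingness separate, which is the point of the unidirectional definition.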

Communication Ethics book part for Example. (This is an automatically generated summary to avoid having huge posts on this page. Click through to read this post.)

Let me show you an example of this model applied to one of the most common Internet operations, a search engine query. Let's call the search engine S (for Search engine) and the person querying the engine P (for person). Let's assume P is already on the search engine's home page and is about to push "submit search".

- P (as sender) opens a connection to S (as receiver) via the Internet (the medium). P sends the search request (the message).

- S, which exists for the sole purpose of searching the Internet in response to such requests, accepts the connection, receives the request and begins processing it. In the past, the search engine has read a lot of web pages. It puts together the results and creates a new connection to P, who is now the receiver, using the Internet. It sends back the results.

Technical people will note at this point that the same "network connection" is used, as TCP is both send and receive, so no new "network connection" is ever created. This is true on a technical level, but from this model's point of view, there is a new "connection"; what constitutes a "connection" does not always match the obvious technical behaviors.

On most search engine pages with most browsers, you'll also repeat this step for each graphic on the results page, loading a graphic that's on the page. In this case, the person P is the sender for the first connection, the company running the search engine S is the receiver for the first connection, and the medium is everything in between, starting at P's computer and going all the way to the search engine itself.
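The exchange just described can be written down as a list of model-level connections. This is a minimal sketch, not a description of how any real search engine or browser works: the `Connection` class, the `search_exchange` function, and the graphic name `logo.png` are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Connection:
    sender: str
    receiver: str
    medium: str
    message: str


def search_exchange(query: str, graphics: List[str]) -> List[Connection]:
    """List the model-level connections for one search results page."""
    connections = [
        Connection("P", "S", "Internet", f"search request: {query}"),
        Connection("S", "P", "Internet", "results page"),
    ]
    # Each graphic on the page repeats the same request/response pattern.
    for name in graphics:
        connections.append(Connection("P", "S", "Internet", f"request: {name}"))
        connections.append(Connection("S", "P", "Internet", f"graphic: {name}"))
    return connections


exchange = search_exchange("communication ethics", ["logo.png"])
for connection in exchange:
    print(connection.sender, "->", connection.receiver, ":", connection.message)
```

Note that the list contains four connections even though, at the TCP level, far fewer network connections might be involved; the model counts direction changes, not sockets.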

This model does not just apply to the Internet and computer-based communication. It applies to all communication. When you buy a newspaper, the newspaper is the medium, and the sender is the publisher. When you watch television, the television is the medium, and the television station is the sender. When you talk to somebody, the air is the medium and the speech is the message. This is a very general and powerful model for thinking about all forms of communication.

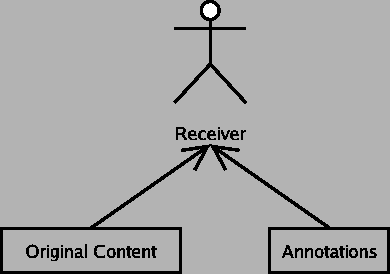

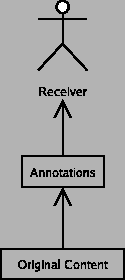

Communication Ethics book part for Chain of Responsibility. (This is an automatically generated summary to avoid having huge posts on this page. Click through to read this post.)

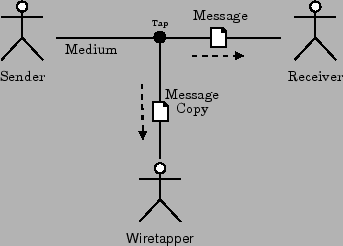

There are many elaborations on this basic model:

- an entity between the sender and receiver catches the message and does something with it. The entity could cache it so future requests are faster, log that a communication occurred, change the message, completely block the message, or any number of other things.

- the communication medium itself may affect or manipulate the message somehow, either according to someone's intent or by accident (transmission flaw), especially as "the medium" can include computers and other such things.

There are any number of ways to expand on those basic elaborations of intercepting the message or manipulating the medium. An exhaustive list of such things would take a long time, but fortunately there is a relatively straightforward way of characterizing all of them. Each possible elaboration consists of some other entity inserting themselves into the connection somehow and manipulating the message between receiver and sender. For every communication made, we can catalog these entities and form a chain of responsibility.

- chain of responsibility

- The listing of all entities responsible for manipulating a message in some form other than pure delivery.
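As a rough illustration of this definition, here is a Python sketch that walks the path a message takes and collects everyone who does something other than pure delivery. The `Hop` class, the action labels, and the example path are assumptions of mine made up for this sketch; in particular, reducing responsibility to a single label per entity is a deliberate simplification.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Hop:
    owner: str   # the person or organization answerable for this step
    action: str  # "deliver", "cache", "log", "edit", or "block"


def chain_of_responsibility(path: List[Hop]) -> List[str]:
    """Return the owners of every hop that does more than pure delivery."""
    return [hop.owner for hop in path if hop.action != "deliver"]


# A quote on its way from a source to a newspaper reader (hypothetical path).
path = [
    Hop("newspaper", "edit"),            # edits the quote, adds the article
    Hop("delivery service", "deliver"),  # just carries the paper
]

chain = chain_of_responsibility(path)
print(chain)
```

The delivery service handles the message physically but stays off the chain, because all it does is deliver.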

Consider a newspaper story containing a quote from some source and some commentary by a reporter. By giving the quote to the reporter acting on behalf of the newspaper, the source is trying to communicate with the readers of the newspaper story. In the communication from the source to the reader, the newspaper intervenes, probably edits the quote, and adds the rest of the article around it. In this case, the newspaper is on the chain of responsibility for this communication. This matches our intuition, namely that the newspaper could potentially distort or destroy the message as it pleases, and it has responsibilities that we commonly refer to as "journalistic ethics", which among other things means that the newspaper shouldn't distort the message.

Being "responsible" for a message means that you are able to affect the message somehow, and are thereby at least partially responsible for the final outcome of the communication. As I write this, millions of conversations are occurring on a telephone. I am not on the chain of responsibility for any of those communications because I have no (practical) ability to affect any of those communications. On the other hand, if someone calls my house and leaves a message with me for my wife, I can affect whether that message is transmitted accurately, or indeed at all. Alas, too frequently I forget to deliver it. Thus, I am on the chain of responsibility for that message, since I have demonstrated the capability of destroying the message before it got to the recipient.

Anybody who can affect the message is therefore on the chain of responsibility even if they have no technical presence on the medium. The biggest, and perhaps only, example of this is a government, which may choose to set rules that affect all messages and thereby has a degree of responsibility for all messages. For instance, the government makes rules about "libel" and "slander", and the government has the ultimate responsibility of enforcing them. Since a government is capable of censoring a message, it is technically on every message's chain of responsibility as a result, though the impact is so diffuse that usually as a practical matter it's not worth worrying about.

The chain of responsibility should consist only of people, corporations in their capacity as people, and "governments" considered as people. Any time it seems like a machine or process is on the chain of responsibility, it is really the person responsible for that machine or process who is on the chain. The reason for this is that a machine or device cannot be "responsible" in the ethical sense for anything, since it is not a person. Sometimes it is not obvious who that person is, and once again it can be a judgement call exactly who is responsible.

For example, consider a browser cache, which stores content from web servers on behalf of the browser user, so they don't need to reload it every time they wish to view it. Clearly "the browser cache" is on the chain of responsibility of a standard web page retrieval communication, because if it works incorrectly or has stale content, it can prevent the user from receiving the correct message. But since "the browser cache" is (part of) a program, and programs aren't allowed on the chain, who is responsible for the browser cache? The obvious answer is the browser manufacturer, but as long as the cache is implemented correctly, that is not necessarily the right answer. Consider a lawsuit against someone who took content from a web browser's cache and then illegally distributed it. Can the browser maker be said to be involved with this? One could make a case for it... perhaps the cache manufacturer should have encrypted the cached content better so the user couldn't just take it out of the cache. On the other hand, that's not necessarily a very convincing argument because clearly the person who is illegally distributing the content is responsible, and the fact that they got it from the cache is merely incidental, as they could have just as easily gotten it directly from the website.

Presence on the chain is not a binary off/on thing, because there are different levels of responsibility. Sometimes it's not possible to strictly determine whether an entity is "on" or "off" the chain. As usual in the real world, there are grays, and thus there is room for legitimate disagreement about how responsible a given entity is in some situations. The most useful question to ask is "How much influence can the person have on the message?" Someone who can silently manipulate the message to say anything they please obviously has more responsibility than someone who can merely block one image from loading on a web page, and can't hide their responsibility. In the caching example, as long as the cache is correctly and honestly implemented, the browser manufacturer has no effective ability to control the messages I see.

Another example: We typically believe that the phone companies or Internet service providers should not examine or modify the messages we use their equipment to send, but just accurately transmit them. In other words, we expect the ISPs and phone companies to stay off the chain of responsibility by refusing to affect the message, despite the fact that physically, our messages travel via their equipment and they could fiddle with it if they chose. This is also a good example of a time when literal physical reality doesn't perfectly match our conception of ethics: If the phone company simply relays our message, we do not hold them ethically responsible for the contents of the message, even though in a technical sense they are. In existing law we refer to this as "common carriers", entities that simply carry communication and are not responsible for the message, contingent on their not affecting the message.

Communication Ethics book part for Time. (This is an automatically generated summary to avoid having huge posts on this page. Click through to read this post.)

Only in the modern era, through live television and radio, telephones, and the Internet have we achieved effectively instantaneous communication over long distance. A lot of communication is still not instantaneous. Thus, a single "connection" may actually have a long life. When one reads the Code of Hammurabi, who ruled in 1795-1750BC, one is reading a work across a connection spanning nearly four thousand years. It can be instructive to consider the chain of responsibility for the work: The original author, the transcriptionist, the carver, the archaeologist, the translator, the web site host... note that I am not on it, I'm just pointing at the work and have no control over it.

This is one of the reasons I define connections as a unidirectional flow, rather than the more intuitive (under some circumstances) bi-directional flow. "Connections", and more specifically, the "messages" quite frequently outlast their senders. Modelling that as unidirectional is the only way this makes sense.

Communication Ethics book part for Intention vs. Literal Speech . (This is an automatically generated summary to avoid having huge posts on this page. Click through to read this post.)

If you read "Hlelo, how are you?", you still understand what I mean despite the typo. (I'm sure you can find some more typos in this work elsewhere for more examples.) But sometimes it isn't so easy to tell what the original message was supposed to be, if the typo or corruption is bad enough. Or sometimes the communicator can't or doesn't say what they mean, or it may not be possible to directly say what they mean in a given medium.

It is always impossible for a receiver to be completely sure they truly understand what the sender was trying to communicate with their message. Rather than opening the topic of whether we can get the "true content" from the message itself, which itself has many large, heavily philosophical books written about it, we will dedicate ourselves to the much simpler task of just trying to make sure that the message itself, the sequence of bits over time, is adequately transmitted from sender to receiver, because that's all we can do.

We will see this come into play later, as we try to determine whether something "really" changed the content of a message or not.

Communication Ethics book part for Fundamental Property: Symmetry. (This is an automatically generated summary to avoid having huge posts on this page. Click through to read this post.)

We hold these Truths to be self-evident, that all men are created equal... Declaration of Independence

Probably the most important and perhaps surprising result of this analysis is a re-affirmation of something that we all should have understood all along: There is no intrinsic ethical asymmetry in the communication relationship. There is no intrinsic value in being the sender over being the receiver, or being the receiver over being the sender. Indeed, every person and legal fiction (corporation, government, etc.) that ever communicates in any fashion does so as both a sender and a receiver at some point.

I mention this to explicitly contradict the subtle, almost subconscious insinuations by large content owners that they have some sort of ethically advantageous position over consumers that should translate to various special privileges. It can be the case that the sender has something the receiver wants, which puts the sender du jour in a position of power over the receiver, but this is an economic position of power, not an ethical one. It is these relationships that drive the so-called "intellectual property" industries. At any moment the receiver may simply decide not to desire the sender's message, whatever it may be (music, movies, newspapers, etc.), and the economic power the sender has is gone. To the extent this remains a purely monetary concern, the symmetry property is maintained, because after all, the sender desires the receiver's money, too. Capitalism is negotiating the level of desires in such a way that business occurs and money and goods flow.

An example of such a "special privilege": Levies on blank media based on the assumption that some of the media will be used to illegally copy content, which are paid to certain large companies who own content. The right to charge what is in every sense a tax is granted to these companies simply because they own content, regardless of whether a given purchaser will actually use it for illegal copying. We consumers don't even subsequently receive the right to place whatever we want on this media based on the fact that we have quite literally already paid for it (at least in the US), which might do something to restore the symmetry; we can still be prosecuted for "piracy". So media companies receive these fees not because it is part of a mutually beneficial bargain, not because it is part of some general mechanism available to all senders, but because they enjoy special, asymmetrical privileges not available to the rest of us.

It is ethically dangerous to promote one person's or entity's interests over another's, for much the same reason that the authors of the Declaration saw fit to put "all Men are created Equal" right at the top. Once it is stipulated that one entity is superior to another, history shows us time and time again that the leverage is used to gather just a bit more power, and a bit more, and a bit more, and so on and so forth until the inequity is so great the inferior entity rebels in some manner. The authors of the Declaration of Independence were quite familiar with history, and they found this so clear they called it self-evident. If you don't agree that this is bad, I really don't know how to convince you; this is axiomatic.

But beyond the basic argument from "self-evidence" that I just gave you, there is a deeper reason this property must hold: Because any entity is both a sender and a receiver, any "special" treatment accorded to one side must paradoxically be accorded to the other to be at all consistent. This can be hard to grasp, but perhaps the best example is the Berman-Coble bill (see Freedom to Tinker's coverage), which would grant a copyright holder special power in enforcing their copyright. The music industry desires this power, yet when faced with the actual bill, it occurred to them and many others that the bill could equally well be used against them by other copyright holders ("Hacking the Law"). And suddenly, the bill looks much less attractive... You cannot grant special concessions to either side of the communication relationship, because those same privileges will turn around and bite back in the next minute as roles reverse. Only an entity which only consumes or only produces can afford this sort of thing, and it is really hard to imagine such an entity; even the mythical "pure consumer" expects that at least in theory, if they choose to communicate they will have free speech, copyright protection, and all of the other reasonable things one expects to protect one's communication.

A couple of other examples: Software companies trading demographic information about their customers like baseball cards yet trying to block the consumer equivalents, such as performance benchmarks of the software (see the UCITA provisions). I acknowledge as one of the counterexamples to this symmetry principle the government's occasional need to keep information classified, but we have the Freedom of Information Act too, showing the balance is merely tipped, not broken.

In some situations, one may voluntarily agree to forgo the symmetry and agree to some set of constraints imposed in a contract by another entity. This exchange of rights is what lies behind our contract law. However, it is unethical to require someone to forgo this symmetry without compensation. Due compensation is a big part of contract law, and I remind you I use "law" here in the sense of "applied ethics"; in theory, a contract is not valid unless both sides receive something of value. It is a judgement call exactly where the line is drawn. You will probably be unsurprised that I consider the actions of music companies unethical at this point, increasingly requiring that the user commit to not performing actions acceptable in the past (such as making personal copies), and yet charging the same amount or even more as was charged historically for the same goods.

Sorry to beat on the music industry, but they have been the most aggressively honest about their intentions regarding these issues. They aren't the only group I disagree with, they just provide the most vivid illustrations.

Communication Ethics book part for A Natural Balance.

This symmetry has always been with us; as I mentioned in Ethical Drift, we've simply forgotten it after so many years of the current status quo. A nice side effect of re-recognizing the symmetry of communication, that all people have equal rights to communicate, is that it provides us a nice balancing point. This principle provides a natural way of examining the relationships between various entities and considering how ethical they are. Is one side elevating itself over the other? Is the other side justly compensated for this elevation? (Mere compensation is not enough; if you paid me a penny for forty hours of work, you are unjustly taking advantage of me, even though I am "compensated".) Is a larger entity using its size abusively? Just this one simple, nearly-forgotten principle has a lot of resolving power when the proper questions are asked.

Communication Ethics book part for Fundamental Property: Only Humans Communicate.

You remember where I first showed the communication model I'm building? See the people on each side of the connection? One must never forget that communication only occurs between people (and corporations in their capacity as people). This may sound like a strange thing for a computer scientist to say, but it is vital to not get distracted by the technology. Computers are null entities in ethical terms. Only how people use them matters.

This is critical because it is so easy to get sidetracked by the technology, but the tech just doesn't matter, except inasmuch as it allows and enables communication among humans. If a computer just randomly downloads something for no human reason (say, some weird transient bug due to a power spike) and it will never be seen by a human, it really doesn't matter. If someone downloads a music file from a fellow college dorm resident and immediately deletes it, it may be technically illegal (in the "against the law" sense), but ethically I'd say that's a null event. If anything occurs that never makes it back to a human being at some point, who really cares?

It is worth pointing out that by and large, current law sees things this way as well. One does not arrest a computer and charge it with a crime (excepting certain cases created in the War on Drugs, which is beside my point here).

The claim that computers can violate the copyright of software by loading it into memory from the disk (necessitating a license that permits this act)? As stupid as it sounds. Who cares what a computer does? The only actions that matter are those performed by a human. Media are just tools; they have no ethical standing of their own. In fact, a human never experiences any copy of any communication located on a hard drive. The only copy that matters is the one the human is actually experiencing, which is the actual photons or air vibrations or whatever else is used to "play" or "consume" the media.

Only people matter.

An example to illustrate the point: Suppose a hacker breaks into a computer and installs FTP server software on the computer, allowing it to serve illegally copied software and music. Suppose that four days later, the computer owner notices and takes immediate action to shut the FTP server down. During those four days, the computer may have served out thousands of copyright violations. Ethically, can we hold the computer, and by extension, the computer owner responsible? No! We should hold the hacker responsible, not the computer. Only humans communicate, and the computer owner was not even aware of the offending communication, and took no actions to enable it. His or her computer was being used in communication as a medium by completely different senders and receivers. Ethically, the owner was an innocent bystander to the software piracy.

Note this scenario happens daily, and to the best of my knowledge nobody has ever been prosecuted for being hacked and having an FTP server run on their machine. This could change at any time...

Communication Ethics book part for The Key to Robustness: Follow The Effects.

It turns out this fundamental property is the key to robustness. If we had to pay attention to the actions of every computer and router and device between the sender and the receiver, we'd never be able to sort out the situation with any degree of confidence. The modern Internet is quite complicated, and some innovative and complicated ways of communicating have been developed. As we try to apply older models to these issues, the complexity explodes and we are left unable to determine anything useful about the situation.

But we don't need to worry about the actions of every router and program between the receiver and the sender. All we need to worry about are the results, and who is on the chain of responsibility. The technology is unimportant.

For instance, recall the example in chapter 2, where I talked about Australia considering requiring licenses for streaming video over the Internet. A bit of thought revealed the complications inherent in the issue: What if I don't stream, but provide downloadable video? What if I send chunks that are assembled on the user's computer and claim I never actually sent any actual video, just some random chunks of numbers? What if I just want to stream video as a 1-to-1 teleconference? If you define the problem in terms of what the machines are doing, then any attempt at law-making is doomed to failure, because there's always another way around the letter of the law. Instead, this ethical principle says follow the effects. If it looks like television, where you are in any way making video appear to many hundreds or thousands of users reasonably simultaneously, then call it television and license it. I don't care if you're mailing thousands of people CDs filled with time-locked video streams, bouncing signals off the Moon, or using ESP. After all, Australia really only cares about effects; the tech is just a red herring. On the other hand, Grandma emailing a video, or 1-to-1 teleconferencing, is obviously not television. The television commission should then leave it alone.

Of course there would be details to nail down about how exactly one defines "television" (remember all those axes in the communication history section?), but that's what government bureaucracies are for, right? "Scale of people reached" is a natural axis to consider in this problem. I'm not claiming this provides one unique answer, but actively remembering the principle that only humans communicate provides a lot of very important guidance in handling these touchy issues, and makes it at least possible to create useful guidelines.
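For the programmers in the audience, "follow the effects" can be sketched as a rule that never looks at the delivery technology at all. This is a minimal Python illustration under loud assumptions: the `Transmission` fields, the function `looks_like_television`, and especially the audience threshold of 500 are all hypothetical stand-ins for the details a real commission would have to nail down.

```python
from dataclasses import dataclass


@dataclass
class Transmission:
    """What a communication looks like from the receivers' side."""
    technology: str      # e.g. "streaming", "mailed CDs"; deliberately ignored below
    medium: str          # what the humans experience: "video", "text", ...
    audience_size: int   # how many people receive it
    simultaneous: bool   # do they receive it at roughly the same time?


# Hypothetical cutoff; the text above only says "many hundreds or thousands".
BROADCAST_AUDIENCE_THRESHOLD = 500


def looks_like_television(t: Transmission) -> bool:
    """Classify by effect only; the delivery technology is never consulted."""
    return (
        t.medium == "video"
        and t.simultaneous
        and t.audience_size >= BROADCAST_AUDIENCE_THRESHOLD
    )


examples = [
    Transmission("streaming server", "video", 20_000, True),
    Transmission("time-locked CDs by mail", "video", 5_000, True),
    Transmission("email attachment from Grandma", "video", 3, False),
    Transmission("1-to-1 teleconference", "video", 1, True),
]

for t in examples:
    print(f"{t.technology}: {looks_like_television(t)}")
```

Under these assumptions the first two examples count as television and the last two do not, even though the `technology` field differs in every case. Swapping in a new delivery mechanism changes nothing unless it changes what the people on the receiving end experience.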

In fact, I submit that no ethical system for communication can fail to include this as a fundamental property. Not only is it nonsensical to discuss the actions of computers in some sort of ethical context, I think it would be impossible to create a system that would ever actually say anything, due to the huge number of distinct technological methods for obtaining the same effects. Consider just as one example the incredibly wide variety of ways to post a small snippet of text that can be viewed by arbitrary numbers of people: Any number of web bulletin board systems, a large number of bulletin board systems over Telnet, Usenet, email mailing lists with web gateways, literally hundreds of technological ways of producing the same basic effect. Yet despite the near-identical effect produced by those technologies, if we insist on closely examining the technology, each of those has slightly different implications for who is hosting the content, where the content "came from", who is on the chain of responsibility of a given post, etc. Are we going to legislate on a case by case basis, which even in this small domain is hundreds of distinct technologies? The complexity of communication systems is already staggering, and it's not getting any easier. On the whole, we must accept this principle, or effectively admit defeat.

Communication Ethics book part for Fundamental Property: Everything Is Digital.

Everything is digital. There is no analog to speak of; analog is an artifact of technology, with little to no discernible advantage over digital, except for generally requiring somewhat less sophisticated technology to create and use.

Why is this true? Because digital subsumes analog into itself: Everything analog can become digital, with all the copying and distribution benefits thereof. The Internet even provides us with a way to digitize things that might seem like too much effort to digitize by allowing people to easily distribute the workload. Even the daunting task of digitizing centuries of books has been undertaken, and by now, any book that is old enough to be out of copyright, and is famous enough for you to think of off the top of your head, has been digitized and is available at Project Gutenberg. Other examples:

- The Project Gutenberg Distributed Proofreaders convert public domain books into electronic text by parceling out the various necessary actions to many people. One person scans in the book, a page at a time, which currently is the hardest thing any single person has to do, though there is some hope that robotics can take over this job in the future. The pages are run through an OCR software program, which is much better than nothing but is very noticeably inaccurate. The Distributed Proofreaders project uses the web to then present each page and the results of the OCR to a human, who corrects the errors the computer has made. The page and the corrected text are then presented to a second human for further verification, and finally one person knits all the contributions into a whole, coherent e-text.